Data Lake Architecture: 6 Key Design Considerations

Many companies, such as retailers, consumer banks, commercial airlines, and insurance companies, are joining the rush to build data lakes that store petabytes of security data and logs. But many executives and architects assume that once they have set up log sources, provided parsers, and given their SOC analysts access to the data, their data lake will deliver value on its own. In practice, that value depends on a handful of design decisions made up front.

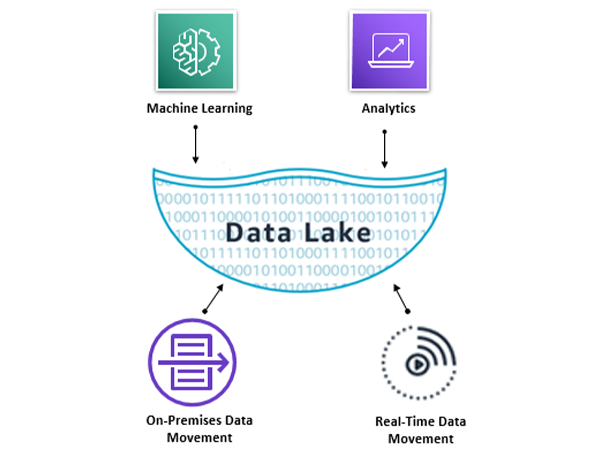

What is a data lake?

A data lake is a central repository that lets you store all your structured and unstructured data at any scale. You can store your data as-is, without having to structure it first, and run different types of analytics, from dashboards and visualizations to big data processing, real-time analytics, and machine learning, to guide better decisions.
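
Storing data "as-is" and structuring it only when it is queried is often called schema-on-read. The sketch below illustrates the idea with a hypothetical in-memory landing zone (`raw_zone`, `ingest`, and `read_with_schema` are illustrative names, not part of any product): records of different shapes are stored untouched, and each reader applies only the fields it needs.

```python
import json

# Hypothetical raw landing zone: records are kept exactly as they arrive,
# with no schema enforced at write time.
raw_zone: list[str] = []

def ingest(record: str) -> None:
    """Append a raw record without parsing or validating it."""
    raw_zone.append(record)

def read_with_schema(fields: list[str]) -> list[dict]:
    """Apply a schema at read time: keep only the requested fields and
    fill in None where a record lacks one. Non-JSON lines stay in the
    lake but are skipped by this particular view."""
    rows = []
    for line in raw_zone:
        try:
            obj = json.loads(line)
        except json.JSONDecodeError:
            continue
        if isinstance(obj, dict):
            rows.append({f: obj.get(f) for f in fields})
    return rows

ingest('{"user": "alice", "action": "login", "src_ip": "10.0.0.5"}')
ingest('{"user": "bob", "action": "logout"}')
ingest("Jan 12 10:00:01 host sshd[42]: Accepted password for carol")

print(read_with_schema(["user", "action"]))
```

The unstructured syslog line is still retained; a different reader (for example, a text parser) could apply its own structure to it later.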

1. Think long-term and design for growth

As your business grows, your production environment grows with it in scale, variety, and complexity. Your log volume will expand, and you will want to add logs from new applications and infrastructure that were not online when you first planned your data lake. When choosing a solution that can grow with your business, you want an architecture that lets you scale in a predictable and cost-efficient way. Exabeam Data Lake, which uses Elasticsearch to power its operations, offers this flexibility: it scales without requiring you to re-architect the infrastructure.
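
Planning for predictable scaling starts with a rough capacity projection. The sketch below assumes daily log volume compounds at a fixed monthly rate and that logs are retained for a fixed window; the function name and all input figures are hypothetical placeholders to be replaced with your own measurements.

```python
def project_retained_tb(daily_gb: float, monthly_growth: float,
                        months: int, retention_days: int) -> list[float]:
    """Estimate retained storage (TB) at each monthly step, starting today.

    Assumes daily volume compounds at `monthly_growth` per month and treats
    every day in the retention window as a full day at the current rate.
    That slightly overestimates, which is a safe upper bound for planning.
    """
    projections = []
    for month in range(months + 1):
        daily_now_gb = daily_gb * (1 + monthly_growth) ** month
        projections.append(daily_now_gb * retention_days / 1000)  # GB -> TB (decimal)
    return projections

# Hypothetical inputs: 500 GB/day today, 5% monthly growth, 90-day retention.
plan = project_retained_tb(500, 0.05, 12, 90)
print(f"today: {plan[0]:.1f} TB, in 12 months: {plan[-1]:.1f} TB")
```

Even modest compound growth nearly doubles the footprint within a year in this example, which is why an architecture that scales without re-architecting matters.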

2. Identify all your data sources

Don’t make the mistake of carrying over the Events Per Second (EPS) or daily log volume figures from your current system. You might hear from an internal team that your current system ingests only a portion of your logs, on a selective basis, because “Vendor X bills us incrementally by log volume.” The Exabeam Data Lake pricing model removes that concern, so catalog all of your different data sources, such as network devices, firewalls, Windows hosts, email, applications, and more. Identify the data sources that produce a variable volume of logs and are prone to spikes due to traffic volume, seasonality, and other factors.
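
One simple way to find spiky sources is to compare each source's peak daily volume with its average over a sample period. The sketch below uses made-up volumes and an assumed 1.5x threshold; `spiky_sources` is a hypothetical helper, and the threshold should be tuned to your environment.

```python
from statistics import mean

# Hypothetical daily log volumes (GB) per source over one sample week.
daily_volume_gb = {
    "firewall": [120, 125, 118, 122, 130, 127, 121],
    "windows": [80, 82, 79, 81, 80, 78, 83],
    "email": [15, 16, 14, 60, 15, 17, 16],
}

def spiky_sources(volumes: dict[str, list[float]],
                  threshold: float = 1.5) -> dict[str, float]:
    """Return sources whose peak day exceeds their average by more than
    `threshold` times, mapped to their peak-to-average ratio."""
    flagged = {}
    for source, days in volumes.items():
        ratio = max(days) / mean(days)
        if ratio > threshold:
            flagged[source] = round(ratio, 2)
    return flagged

# Size ingestion for peaks, not averages: sum each source's busiest day.
peak_total_gb = sum(max(days) for days in daily_volume_gb.values())

print(spiky_sources(daily_volume_gb), peak_total_gb)
```

Here only the email source is flagged, but it is exactly the kind of source whose average volume would understate the capacity you need.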

Understand the different log formats and the proportion of structured versus unstructured data. This will help you plan and define parsing requirements before you start a deployment. With Exabeam Data Lake, you should not need to write regular expressions to parse your data; we deliver hundreds of out-of-the-box parsers to help you make sense of your security logs. And if you have a new log source, we’ll quickly build a parser for you.
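
Before defining parsing requirements, it can help to measure the format mix in a sample of raw logs. The sketch below is a rough heuristic, not Exabeam's parser logic: it labels each line as JSON, `key=value`, or unstructured text. The regular expression and the three categories are assumptions chosen for illustration.

```python
import json
import re
from collections import Counter

# Rough heuristic for "key=value" lines such as: action=allow src=10.0.0.5
KV_LINE = re.compile(r"^\s*\w+=\S+(?:\s+\w+=\S+)*\s*$")

def classify(line: str) -> str:
    """Label a raw log line as 'json', 'key_value', or 'unstructured'."""
    try:
        if isinstance(json.loads(line), dict):
            return "json"
    except json.JSONDecodeError:
        pass
    if KV_LINE.match(line):
        return "key_value"
    return "unstructured"

def format_mix(lines: list[str]) -> dict[str, float]:
    """Return the share of each format in a sample of log lines."""
    kinds = ("json", "key_value", "unstructured")
    if not lines:
        return {k: 0.0 for k in kinds}
    counts = Counter(classify(line) for line in lines)
    return {k: counts[k] / len(lines) for k in kinds}

sample = [
    '{"event": "login", "user": "alice"}',
    '{"event": "logout", "user": "bob"}',
    "action=allow src=10.0.0.5 dst=8.8.8.8",
    "Jan 12 10:00:01 host sshd[42]: Accepted password for carol",
]
print(format_mix(sample))
```

A high unstructured share signals that more parser work (or vendor-supplied parsers) will be needed before the data is useful for analytics.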

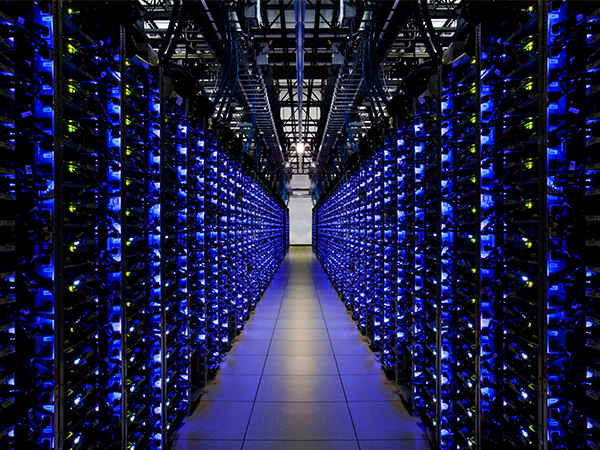

3. Decoupling storage from compute

Research from Forrester estimates that 70 percent of the data collected by organizations goes unused for business intelligence (BI) and analytics. As a result, a data lake architecture that couples compute and storage pays for compute capacity that sits idle. By decoupling storage from compute, data teams can scale storage easily and economically to keep up with the proliferation of data sets.
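
The cost argument can be made concrete with simple arithmetic. In a coupled design, each node bundles a fixed amount of storage with compute, so node count is driven by data volume alone; in a decoupled design, storage is billed per TB and compute is sized to the query workload. Every price and node count below is a made-up placeholder, not real vendor or cloud pricing.

```python
import math

def coupled_monthly_cost(data_tb: float, tb_per_node: float, node_cost: float) -> float:
    """Coupled: node count is set by how much data must be stored,
    so compute is bought whether or not the data is ever queried."""
    return math.ceil(data_tb / tb_per_node) * node_cost

def decoupled_monthly_cost(data_tb: float, storage_cost_per_tb: float,
                           compute_nodes: int, compute_node_cost: float) -> float:
    """Decoupled: storage billed per TB; compute sized to actual query load."""
    return data_tb * storage_cost_per_tb + compute_nodes * compute_node_cost

# Hypothetical figures: 500 TB of logs, 10 TB per coupled node at $1,000/month,
# versus $25/TB/month object storage plus 15 compute nodes at $800/month.
print(coupled_monthly_cost(500, 10, 1000))        # 50 nodes
print(decoupled_monthly_cost(500, 25, 15, 800))
```

The gap widens as data grows, because in the decoupled model only the storage term scales with volume.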

4. Security

As with any cloud-based deployment, security for a data lake is the top priority. Broadly speaking, the three areas of security relevant to a data lake in the cloud are data encryption, network-level security, and access control. Encryption of stored data (at rest) is important, at least for data that is not publicly available. Encryption in transit is another important consideration. This is usually handled through each service's built-in options or through TLS/SSL with the associated certificates.
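
For encryption in transit, a log shipper should refuse unverified or outdated TLS connections. The sketch below uses Python's standard `ssl` module to build a client context that requires certificate verification and hostname checking and rejects protocol versions older than TLS 1.2; the collector hostname in the comment is hypothetical. Encryption at rest is typically configured on the storage service itself and is not shown here.

```python
import ssl

def make_client_context(
        min_version: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2) -> ssl.SSLContext:
    """Build a client TLS context for sending logs to an ingestion endpoint."""
    # Loads the system CA bundle, requires a valid server certificate,
    # and enables hostname checking.
    ctx = ssl.create_default_context()
    # Refuse anything older than the requested version (e.g. TLS 1.0/1.1).
    ctx.minimum_version = min_version
    return ctx

ctx = make_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)

# Usage (not executed here), against a hypothetical collector on the
# syslog-over-TLS port 6514:
# with socket.create_connection(("collector.example.internal", 6514)) as sock:
#     with ctx.wrap_socket(sock, server_hostname="collector.example.internal") as tls:
#         tls.sendall(b"<log line>\n")
```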

Network-level security should be consistent with the organization’s overall security framework, and it plays an important part in a defense-in-depth strategy by blocking inappropriate access at the network level. Authentication and authorization are the two key areas of access control.
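
Authentication answers "who are you?" and authorization answers "what may you do?". The minimal role-based sketch below keeps the two checks separate; the user store, roles, and dataset names are all hypothetical, and a production data lake would normally delegate authentication to an identity provider rather than store token digests itself.

```python
import hashlib
import hmac

# Hypothetical user store: usernames mapped to SHA-256 digests of API tokens.
TOKEN_DIGESTS = {"analyst1": hashlib.sha256(b"s3cret-token").hexdigest()}
USER_ROLES = {"analyst1": "soc_analyst"}
ROLE_PERMISSIONS = {
    "soc_analyst": {("firewall_logs", "read"), ("windows_logs", "read")},
    "admin": {("firewall_logs", "read"), ("firewall_logs", "delete")},
}

def authenticate(user: str, token: str) -> bool:
    """Authentication: does the presented token belong to this user?"""
    expected = TOKEN_DIGESTS.get(user)
    if expected is None:
        return False
    presented = hashlib.sha256(token.encode()).hexdigest()
    return hmac.compare_digest(presented, expected)  # constant-time comparison

def authorize(user: str, dataset: str, action: str) -> bool:
    """Authorization: does the user's role grant this action on this dataset?"""
    role = USER_ROLES.get(user)
    return (dataset, action) in ROLE_PERMISSIONS.get(role, set())

def can_access(user: str, token: str, dataset: str, action: str) -> bool:
    return authenticate(user, token) and authorize(user, dataset, action)

print(can_access("analyst1", "s3cret-token", "firewall_logs", "read"))
print(can_access("analyst1", "s3cret-token", "firewall_logs", "delete"))
```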

5. Recovery

Recovery typically refers to the policies and procedures that restore operations and the availability of mission-critical systems. Banks and other regulated industries require detailed disaster recovery mechanisms. But each organization must determine the level of automation and sophistication it needs for itself. Some teams operate mirror sites with full replication of logs, context data, and user-defined data; this can become very complex and costly.
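
Whatever level of replication you choose, it is only useful if the mirror actually matches the primary. The sketch below assumes both copies are reachable as local directory trees (a simplification; object stores usually expose checksums through their own APIs) and reports files that are missing from the mirror or whose SHA-256 digests differ. `replication_gaps` is a hypothetical helper name.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large log files are not read into memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()

def replication_gaps(primary: Path, mirror: Path) -> dict[str, list[str]]:
    """Compare every file under `primary` with its counterpart under `mirror`."""
    missing, mismatched = [], []
    for src in sorted(p for p in primary.rglob("*") if p.is_file()):
        rel = src.relative_to(primary)
        dst = mirror / rel
        if not dst.is_file():
            missing.append(str(rel))
        elif sha256_of(src) != sha256_of(dst):
            mismatched.append(str(rel))
    return {"missing": missing, "mismatched": mismatched}

# Demo with throwaway directories: one file never replicated, one stale copy.
with tempfile.TemporaryDirectory() as tmp:
    primary, mirror = Path(tmp, "primary"), Path(tmp, "mirror")
    primary.mkdir()
    mirror.mkdir()
    (primary / "fw.log").write_text("allow 10.0.0.5\n")
    (primary / "dns.log").write_text("query example.com\n")
    (mirror / "fw.log").write_text("allow 10.0.0.6\n")  # stale copy
    report = replication_gaps(primary, mirror)

print(report)
```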

6. Metadata storage

A data lake design must include a metadata store (catalog) so that users can search for and learn about the data sets in the lake. Two important principles for ensuring that metadata is created and maintained are enforcing a metadata requirement and automating the creation of metadata.
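
Both principles can be sketched together: registration is refused unless required descriptive fields are supplied (enforcement), and technical metadata such as schema and row count is derived from the data itself (automation). The in-memory `CATALOG`, the required fields, and the function names below are hypothetical stand-ins for a real metadata store.

```python
from datetime import datetime, timezone

CATALOG: dict[str, dict] = {}                # hypothetical in-memory metadata store
REQUIRED_FIELDS = ("owner", "description")   # assumed mandatory metadata

def infer_schema(records: list[dict]) -> dict[str, str]:
    """Automatically derive column names and observed value types."""
    schema: dict[str, set[str]] = {}
    for rec in records:
        for key, value in rec.items():
            schema.setdefault(key, set()).add(type(value).__name__)
    return {k: "|".join(sorted(v)) for k, v in sorted(schema.items())}

def register_dataset(name: str, records: list[dict], **user_metadata: str) -> None:
    """Enforce required metadata, then add automatically generated fields."""
    missing = [f for f in REQUIRED_FIELDS if not user_metadata.get(f)]
    if missing:
        raise ValueError(f"dataset {name!r} rejected, missing metadata: {missing}")
    CATALOG[name] = {
        **user_metadata,
        "schema": infer_schema(records),
        "row_count": len(records),
        "registered_at": datetime.now(timezone.utc).isoformat(),
    }

records = [{"user": "alice", "bytes": 512}, {"user": "bob", "bytes": None}]
register_dataset("proxy_logs", records, owner="secops",
                 description="Outbound web proxy logs")
print(CATALOG["proxy_logs"]["schema"])
```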

Conclusion

A data lake offers some crucial advantages: it delivers faster query results on low-cost storage and supports structured, semi-structured, and unstructured data. That is why it is important for organizations to develop a strong data lake architecture that meets enterprise-wide analytical requirements.